The generative AI detection gap is the shadowy, unsettling space where AI-generated content blends seamlessly with human-made work, leaving us questioning what’s real and what’s not. This isn’t just a tech problem; it’s a societal one, impacting everything from news credibility to the authenticity of images and videos. The rapid evolution of AI means the gap is widening faster than we can develop solutions.

Think deepfakes so realistic they’d fool your grandma, or AI-written articles that slip past plagiarism detectors. The current tools for identifying AI-generated content are struggling to keep up. This isn’t just about catching cheaters; it’s about protecting ourselves from misinformation, manipulation, and a future where trust becomes a luxury.

Defining the Generative AI Detection Gap

The ability to reliably distinguish between human-written and AI-generated text is proving to be a far more complex challenge than initially anticipated. While several detection tools have emerged, a significant gap remains, highlighting the limitations of current technology and the sophistication of modern large language models. This gap isn’t just a technical hurdle; it has significant implications for academic integrity, journalistic ethics, and the fight against misinformation.

Existing generative AI detection tools often rely on identifying statistical patterns and stylistic quirks associated with AI-generated text. However, the generative models are constantly evolving, leading to a frustrating arms race between detectors and the models they target. The subtle nuances of human writing, including inconsistencies, grammatical errors, and unexpected turns of phrase, are increasingly replicated by advanced AI, which makes detection harder. Furthermore, the effectiveness of these tools varies wildly depending on the length and complexity of the text, the specific AI model used to generate it, and even the prompts used to generate the content.

Limitations of Current Generative AI Detection Tools

Current detection tools struggle with several key aspects of text analysis. They often flag human-written text as AI-generated due to their reliance on statistical probabilities rather than a deep understanding of semantics and context. Conversely, sophisticated AI models can now produce text that mimics human writing styles convincingly, evading detection algorithms that rely on identifying predictable patterns. This is particularly true when the AI is instructed to emulate a specific writing style or author. For instance, an AI instructed to write in the style of Ernest Hemingway might produce text that easily fools a detector that relies on simplistic statistical analysis. The limitations are amplified when dealing with creative writing, poetry, or code, where stylistic variations are far more pronounced and harder to quantify.
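
The false-positive problem is easier to see with numbers. Here is a minimal sketch, assuming made-up figures (the prevalence and error rates below are hypothetical, not measurements from any real detector). It applies Bayes' rule to ask: of all the texts a detector flags, what share is actually AI-generated?

```python
def flag_precision(prevalence: float,
                   true_positive_rate: float,
                   false_positive_rate: float) -> float:
    """Share of flagged texts that are actually AI-generated (Bayes' rule)."""
    flagged_ai = prevalence * true_positive_rate
    flagged_human = (1.0 - prevalence) * false_positive_rate
    total_flagged = flagged_ai + flagged_human
    if total_flagged == 0:
        return 0.0
    return flagged_ai / total_flagged


# Hypothetical numbers for illustration only:
# 10% of submissions are AI-written, the detector catches 90% of them,
# and it wrongly flags 5% of human-written texts.
precision = flag_precision(prevalence=0.10,
                           true_positive_rate=0.90,
                           false_positive_rate=0.05)
print(f"{precision:.2f}")  # 0.67
```

Under these assumed numbers, roughly one flagged text in three was written by a human, even though the detector looks accurate on paper. The rarer AI-generated text is in the pool, the worse this ratio gets.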

Discrepancies Between Human and AI-Generated Content

One of the biggest challenges lies in identifying subtle differences that are difficult to quantify algorithmically. While AI-generated text might be grammatically correct and stylistically consistent, it often lacks the nuanced understanding of the subject matter that a human writer possesses. This can manifest in inconsistencies in logic, a lack of original insights, or an over-reliance on clichés and predictable phrasing. However, these subtle inconsistencies are often difficult for current detection tools to reliably identify. Advanced AI models are learning to overcome these limitations, making the detection gap even wider.

Examples of AI-Generated Text Evading Detection

Consider an AI tasked with writing a news article about a local event. A sophisticated model could easily generate a grammatically correct and stylistically plausible article that accurately reflects the event’s details. Current detectors might struggle to distinguish this from a human-written article, particularly if the AI has access to and processes information from reliable sources. Similarly, an AI instructed to write a short story in a particular genre could produce a creative piece that mimics the style and conventions of that genre, potentially evading detection by mimicking human creativity. This points to the need for detection methods that go beyond simple statistical analysis.

Comparison of Generative AI Detection Approaches

Several approaches are used in generative AI detection, each with its strengths and weaknesses. Some rely on statistical analysis of word choice, sentence structure, and other linguistic features. Others employ machine learning models trained on large datasets of both human-written and AI-generated text. Yet another approach uses watermarking techniques, embedding subtle signals within the generated text that can be detected later. However, each method has its limitations. Statistical methods are easily circumvented by sophisticated AI, while machine learning models can be prone to bias and require constant updating. Watermarks can be spotted and stripped by adversaries, and embedding them may alter the text itself. The ideal approach likely involves a combination of techniques, leveraging the strengths of each to improve overall detection accuracy.
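
To make "a combination of techniques" concrete, here is a minimal sketch that blends scores from several detectors into one number. Everything in it is an assumption for illustration: the detector names, the weights, and the idea that each detector reports a score between 0 and 1. Real systems may combine signals quite differently.

```python
def combine_scores(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-detector scores, each assumed to lie in [0, 1].

    Only detectors that actually produced a score are counted, so a missing
    signal (for example, no watermark check available) does not drag the
    result toward zero.
    """
    total_weight = sum(weights[name] for name in scores)
    if total_weight <= 0:
        raise ValueError("weights of the reporting detectors must sum to a positive value")
    return sum(scores[name] * weights[name] for name in scores) / total_weight


# Hypothetical detectors and weights.
weights = {"statistical": 1.0, "classifier": 2.0, "watermark": 3.0}
scores = {"statistical": 0.40, "classifier": 0.80, "watermark": 1.00}
print(f"{combine_scores(scores, weights):.2f}")  # 0.83
```

A weighted average is the simplest possible choice here. It keeps each detector's contribution easy to explain, which matters when a human has to justify why a text was flagged.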

Types of Generative AI and Detection Challenges

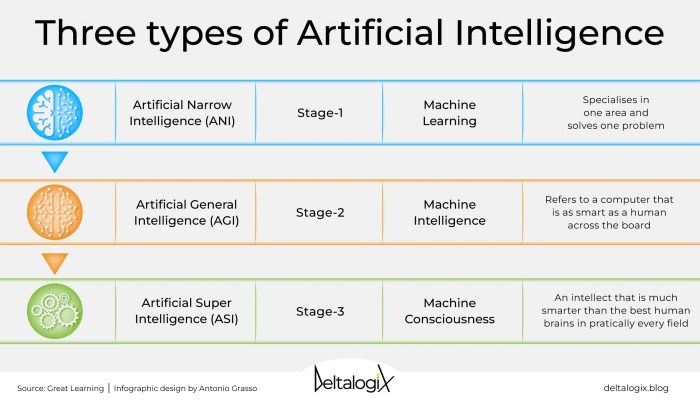

The rapid advancement of generative AI across various modalities – text, image, audio, and video – has created a complex landscape for detection. The core challenge lies in the ever-evolving nature of these models and the subtle nuances that distinguish AI-generated content from human-created material. Understanding these differences and the specific challenges posed by each modality is crucial for developing effective detection strategies.

Different generative AI models employ distinct techniques, leading to varying degrees of detectability. Text-based models, for instance, might exhibit patterns in sentence structure or vocabulary that differ from human writing. Image generation models, similarly, can leave subtle artifacts or inconsistencies that betray their artificial origin. This variance in detectability across modalities necessitates a tailored approach to detection, rather than a one-size-fits-all solution.

The generative AI detection gap is a serious issue, leaving us vulnerable to sophisticated deepfakes and AI-generated misinformation. Think about it: the tech is evolving so rapidly that even something as seemingly unrelated as the new features in the Motorola Razr and Razr Plus 2024 pales in comparison to the potential for AI-generated deception. This constant technological leapfrog makes staying ahead of the curve in AI detection a real uphill battle.

Text-Based Generative AI Detection Challenges

Detecting AI-generated text presents unique hurdles. While some models produce grammatically flawless text, they might lack the subtle nuances of human writing, such as stylistic inconsistencies, emotional depth, or contextual coherence. Successful detection methods often leverage sophisticated statistical analysis of text features, including word choice, sentence structure, and stylistic variations. Unsuccessful attempts frequently focus on overly simplistic metrics, neglecting the complexity of human language. For example, early attempts to detect GPT-3 output relied on identifying unusually high probabilities for certain word sequences, but this method proved easily circumvented by newer, more sophisticated models. More robust methods now analyze the overall coherence and stylistic consistency of the text, comparing it against a large corpus of human-written text.
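
The text features mentioned above can be sketched in a few lines. This is an illustrative feature extractor only, not a detector: the function name, the three features, and the simple tokenization rules are assumptions chosen for brevity, and no threshold on these values reliably separates human from AI writing.

```python
import re
import statistics


def style_features(text: str) -> dict[str, float]:
    """Compute a few simple stylometric features from English text."""
    # Split on sentence-ending punctuation and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    # Lowercased word tokens (ASCII letters and straight apostrophes only).
    words = re.findall(r"[A-Za-z']+", text.lower())
    lengths = [len(re.findall(r"[A-Za-z']+", s)) for s in sentences]
    return {
        # Average number of words per sentence.
        "mean_sentence_length": statistics.mean(lengths) if lengths else 0.0,
        # How much sentence length varies (sometimes called "burstiness").
        "sentence_length_stdev": statistics.pstdev(lengths) if lengths else 0.0,
        # Vocabulary diversity: distinct words divided by total words.
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
    }


print(style_features("The cat sat. The cat sat on the mat today."))
```

In practice, features like these would be compared against a large reference corpus of human writing, and they are exactly the kind of signal a newer model or a careful prompt can learn to imitate.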

Image-Based Generative AI Detection Challenges

Identifying AI-generated images is challenging due to the continuous improvement in image generation techniques. Early generative models often produced images with noticeable artifacts, such as blurry areas or unnatural textures. However, modern models generate highly realistic images, making detection significantly harder. Successful methods often focus on identifying subtle anomalies, such as inconsistencies in lighting, shadows, or textures, or the presence of repeating patterns that betray the underlying algorithms. Unsuccessful methods might rely on overly simplistic feature detection, failing to account for the ever-improving realism of AI-generated images. For instance, detection based solely on the presence of specific artifacts is quickly rendered obsolete as models improve. More effective approaches involve analyzing the statistical properties of the image data itself, comparing it against a database of known human-created images.

Audio and Video Generative AI Detection Challenges

Detecting AI-generated audio and video presents even greater difficulties. The sophistication of deepfake technology, for example, makes it incredibly challenging to distinguish between real and fabricated videos. Successful methods in this domain often incorporate advanced techniques like analyzing subtle inconsistencies in lip synchronization, facial expressions, or background noise. Unsuccessful attempts often rely on readily detectable artifacts, which modern deepfake generators effectively mitigate. For example, early deepfakes often exhibited unnatural blinking patterns, a feature that is now easily corrected in advanced models. More robust methods combine multiple detection techniques, including analyzing audio and visual features, and cross-referencing them against known datasets of real recordings.

Comparison of Generative AI Detection Methods

| Generative AI Type | Detection Method | Strengths | Weaknesses |
|---|---|---|---|
| Text | Statistical analysis of text features (e.g., word choice, sentence structure) | Relatively computationally inexpensive; can identify subtle stylistic inconsistencies | Can be easily circumvented by sophisticated models; requires large datasets for training |
| Image | Analysis of subtle anomalies (e.g., inconsistencies in lighting, shadows) | Effective against less sophisticated models; can identify repeating patterns | Difficult to apply to high-resolution, realistic images; computationally expensive |
| Audio | Analysis of inconsistencies in audio characteristics (e.g., lip synchronization, background noise) | Can identify inconsistencies in speech patterns and audio quality | Highly susceptible to sophisticated audio manipulation techniques; requires specialized audio processing tools |
| Video | Combination of audio and visual analysis; deepfake detection techniques | Potentially highly effective against deepfakes; can identify subtle inconsistencies | Extremely computationally expensive; requires large datasets and sophisticated algorithms |

The Evolving Nature of Generative AI and its Impact on Detection

The cat-and-mouse game between generative AI and detection tools is heating up faster than a viral TikTok trend. As generative AI models become more sophisticated, their ability to produce convincingly human-like text, images, and other media makes them harder to distinguish from the real deal. This constant evolution creates a significant and ever-widening detection gap, forcing developers of detection tools to constantly innovate to keep pace.

The rapid advancements in generative AI are directly responsible for this widening gap. New models are trained on far larger datasets, use more advanced architectures, and employ increasingly subtle techniques to mask their artificial origins. This isn’t just about incremental improvements; we’re talking about paradigm shifts in how AI generates content, leaving many existing detection methods struggling to keep up.

New AI Models Circumventing Detection Methods

The development of more sophisticated generative AI models has led to the emergence of techniques specifically designed to evade detection. For instance, some models incorporate “noise” or random variations into their output to disrupt the patterns that detection algorithms rely on. Others use techniques like adversarial training, where the model is specifically trained to fool existing detection systems. Think of it like a digital chameleon, constantly changing its appearance to remain undetected. Imagine a model trained to mimic the subtle stylistic nuances of a particular author; existing detection methods might struggle to differentiate it from genuine work. Another example is the use of advanced prompting techniques that guide the generative model to produce text that avoids triggering detection heuristics or patterns. These methods aren’t just theoretical; they’re actively being implemented and refined.

The Generative AI and Detection Arms Race

The ongoing development of more powerful generative AI models is fueling an escalating arms race between AI developers and the creators of detection tools. It’s a technological tug-of-war, with each side constantly striving to outmaneuver the other. Generative AI developers are pushing the boundaries of what’s possible, creating models capable of producing increasingly realistic outputs. Meanwhile, detection tool developers are scrambling to develop new algorithms and techniques to identify these increasingly sophisticated fakes. This dynamic is likely to continue, with both sides constantly innovating and adapting to maintain their respective advantages. The outcome remains uncertain, but one thing is clear: the detection gap will continue to be a major area of focus and contention for the foreseeable future.

A Timeline of Generative AI and Detection Evolution

The following timeline illustrates the interplay between the evolution of generative AI and the development of detection methods:

| Year | Generative AI Advancements | Detection Method Advancements |
|---|---|---|
| 2014 | Early GANs (Generative Adversarial Networks) emerge, demonstrating the potential for realistic image generation. | Initial attempts at image forgery detection, largely based on analyzing image artifacts. |
| 2017 | Significant improvements in GANs and the introduction of the transformer architecture that underlies later text generation models. | Development of more sophisticated methods for detecting inconsistencies and anomalies in generated images and text. |
| 2019 | GPT-2 demonstrates impressive capabilities in text generation, raising concerns about potential misuse. | Research intensifies into more robust detection methods, including the use of machine learning for detecting stylistic patterns in generated text. |
| 2022-Present | Large language models that build on GPT-3 (released in 2020), together with dedicated image, audio, and video generators, become capable of producing highly realistic and nuanced content. | Focus shifts towards developing more context-aware and adaptive detection methods that can account for the increasing sophistication of generative AI models. Research into watermarking and other proactive techniques gains traction. |

Ethical and Societal Implications of the Detection Gap

The inability to reliably detect AI-generated content creates a significant ethical minefield, impacting everything from public trust to democratic processes. This detection gap isn’t just a technological hurdle; it’s a societal challenge with far-reaching consequences that demand immediate attention and proactive solutions. The potential for misuse is immense, threatening to erode the very foundations of truth and accountability.

The ethical concerns stem from the potential for widespread manipulation and the erosion of trust. When we can’t distinguish between human-created and AI-generated content, it becomes incredibly difficult to ascertain the truth, leading to a climate of uncertainty and distrust. This has significant ramifications for news consumption, academic integrity, and even personal relationships.

Misinformation and Deepfakes: A Perfect Storm

The detection gap fuels the proliferation of misinformation and deepfakes. Deepfakes, hyperrealistic manipulated videos or audio recordings, can be used to create convincing but false narratives, damaging reputations, inciting violence, and influencing elections. The ease with which AI can generate such content, coupled with the difficulty in detecting it, creates a potent cocktail of deception. For example, a deepfake video of a political leader making inflammatory statements could trigger widespread unrest and social division, even if the video is entirely fabricated. Similarly, AI-generated text could be used to spread false information about a company, causing significant financial harm. The lack of effective detection mechanisms allows malicious actors to operate with relative impunity.

Real-World Consequences of the Detection Gap

The consequences of the detection gap are already being felt. The spread of AI-generated disinformation during elections has raised concerns about the integrity of democratic processes. Academic institutions struggle with plagiarism detection as AI-generated essays become increasingly sophisticated. The legal system faces challenges in determining the authenticity of evidence presented in court. These examples highlight the urgent need for robust detection methods and ethical guidelines. The case of a fabricated news article about a celebrity’s death, widely shared online before being debunked, illustrates the speed and reach of AI-generated misinformation and the damage it can inflict. Another instance involved the use of AI-generated images to spread false narratives about environmental disasters, resulting in unnecessary panic and mistrust in official reporting.

Potential Solutions to Mitigate Risks

Addressing the risks associated with the detection gap requires a multi-pronged approach. It’s not enough to simply improve detection technology; we also need to focus on education, regulation, and ethical guidelines.

- Develop more robust AI detection tools: Continued research and development are crucial to creating more sophisticated and reliable AI detection technologies.

- Promote media literacy and critical thinking: Educating the public on how to identify and evaluate AI-generated content is essential to combatting misinformation.

- Implement stricter regulations on the use of generative AI: Regulations can help to prevent the misuse of generative AI for malicious purposes, such as creating deepfakes.

- Develop ethical guidelines for the creation and use of generative AI: Clear ethical guidelines can help to ensure that generative AI is used responsibly and ethically.

- Invest in watermarking and provenance tracking technologies: These technologies can help to trace the origin of AI-generated content and verify its authenticity.
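
Provenance tracking, the last item above, boils down to attaching a verifiable tag to content at the moment it is created. Here is a minimal sketch of that idea using a keyed hash (HMAC) from Python's standard library. The function names and the shared-secret setup are simplifying assumptions; real provenance schemes typically rely on public-key signatures and richer metadata rather than a single shared key.

```python
import hashlib
import hmac


def sign_content(content: bytes, key: bytes) -> str:
    """Return a hex tag binding the content to the holder of the key."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify_content(content: bytes, key: bytes, tag: str) -> bool:
    """Check that the content still matches the tag issued at creation time."""
    expected = sign_content(content, key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)


# Hypothetical publisher key and article text.
key = b"publisher-secret-key"
article = b"Council approves new bike lanes on Main Street."
tag = sign_content(article, key)

print(verify_content(article, key, tag))                 # True
print(verify_content(article + b" (edited)", key, tag))  # False
```

Note the limits of this approach: it shows that tagged content has not been altered since it was signed, but it says nothing about content that was never tagged in the first place.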

Future Directions in Generative AI Detection

The current struggle to reliably detect AI-generated content highlights a critical need for innovative detection techniques. The gap isn’t just a technological challenge; it’s a race against the ever-evolving sophistication of generative AI models. Closing this gap requires a multi-pronged approach, blending advanced algorithms with human ingenuity.

The future of generative AI detection hinges on several key advancements. These advancements will not only improve accuracy but also address the ethical and societal implications of undetected AI-generated content.

Advancements in Detection Techniques

Several promising avenues are being explored to enhance detection capabilities. These include the development of more robust statistical models that can identify subtle patterns and anomalies indicative of AI generation. Researchers are also investigating techniques that analyze the underlying structure and semantic coherence of text, going beyond simple stylistic analysis. Furthermore, advancements in machine learning, specifically in areas like adversarial machine learning and anomaly detection, hold immense potential for improving the accuracy and resilience of detection systems against increasingly sophisticated AI models. For example, imagine a system that not only analyzes word choice but also the subtle nuances of sentence construction, identifying patterns that deviate from human writing styles even when the text itself appears convincingly human. This would represent a significant leap forward in detection capabilities.

Watermarking and Embedded Signals

Incorporating watermarks or other imperceptible signals directly into the generated content is a promising strategy. These signals, imperceptible to a reader or viewer, can act as identifiers that let a detector verify the content’s origin. This approach, akin to digital fingerprinting, could dramatically increase detection accuracy. Think of it like a hidden code embedded within the data itself, providing strong statistical evidence of AI authorship as long as the signal survives editing. For instance, a specific, statistically improbable sequence of characters or a subtle alteration of word frequencies could serve as a watermark, undetectable by a casual reader but easily identifiable by a detection algorithm that knows what to look for. This technique could significantly reduce false positives and negatives, creating a more reliable detection system.
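
The "subtle alteration of word frequencies" idea can be sketched as a statistical test. One family of schemes discussed in watermarking research nudges the generator toward a pseudo-random "green" subset of words at each step, then checks afterwards whether a text contains more green words than chance would predict. The code below is a toy version of the detection side only. The hashing rule, the `GREEN_FRACTION` value, and the function names are illustrative assumptions, not the API of any real system.

```python
import hashlib
import math

GREEN_FRACTION = 0.5  # assumed share of words treated as "green" at each step


def is_green(previous_token: str, token: str) -> bool:
    """Pseudo-randomly assign `token` to the green list, seeded by the previous token."""
    digest = hashlib.sha256(f"{previous_token}\x00{token}".encode("utf-8")).digest()
    return digest[0] / 256.0 < GREEN_FRACTION


def watermark_z_score(tokens: list[str]) -> float:
    """z-score of the observed green-token count against the no-watermark expectation."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return 0.0
    n = len(pairs)
    green = sum(is_green(prev, tok) for prev, tok in pairs)
    expected = GREEN_FRACTION * n
    stdev = math.sqrt(n * GREEN_FRACTION * (1.0 - GREEN_FRACTION))
    return (green - expected) / stdev


sample = "the quick brown fox jumps over the lazy dog".split()
print(round(watermark_z_score(sample), 2))
```

Ordinary text should score near zero, while text from a generator that consistently prefers green words would score several standard deviations higher. That is statistical evidence rather than proof, and heavy paraphrasing can wash the signal out.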

The Role of Human Expertise

While automated systems are crucial, human expertise remains indispensable in fine-tuning and validating AI detection models. Human reviewers can identify patterns and nuances that algorithms may miss, providing valuable feedback for improving detection accuracy. Moreover, human judgment is essential in evaluating the ethical implications of detected content and determining appropriate responses. For instance, a human expert could review flagged content to assess its potential for harm or misuse, ensuring that detection systems are not used to censor legitimate content or unfairly target specific groups. This human-in-the-loop approach ensures that AI detection is not only accurate but also responsible and ethical.
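
A human-in-the-loop setup can be as simple as routing content by detector score, so that no text is acted on without a person looking at it. The sketch below assumes a score between 0 and 1 and two hypothetical thresholds; the route names and cut-off values are illustrative, not recommendations.

```python
from enum import Enum


class Route(Enum):
    CLEAR = "clear"                      # low score: no action taken
    HUMAN_REVIEW = "human_review"        # uncertain: queue for a reviewer
    PRIORITY_REVIEW = "priority_review"  # high score: reviewer confirms first


def route(score: float, review_above: float = 0.3, priority_above: float = 0.9) -> Route:
    """Decide what happens to a text given its detector score in [0, 1]."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    if score >= priority_above:
        return Route.PRIORITY_REVIEW
    if score >= review_above:
        return Route.HUMAN_REVIEW
    return Route.CLEAR


for s in (0.10, 0.50, 0.95):
    print(s, route(s).value)
```

Even the highest-scoring content goes to a reviewer rather than being rejected automatically, which is the point of keeping a human in the loop: the algorithm sets priorities, and a person makes the call.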

A Future with Reduced Detection Gap

Imagine a future where AI-generated content is readily identifiable, not through intrusive measures, but through sophisticated, almost invisible watermarks and advanced detection algorithms. A journalist could use a simple software plugin to verify the authenticity of a news article before publishing, while educators could easily detect AI-generated essays submitted by students. Law enforcement could leverage these tools to identify and track the spread of AI-generated disinformation, effectively countering the misuse of this technology. This scenario, while currently aspirational, becomes increasingly plausible with ongoing advancements in detection techniques and the integration of human expertise. This would represent a significant shift in our relationship with generative AI, allowing for responsible innovation and mitigating the potential risks associated with its misuse.

Case Studies

The generative AI detection gap isn’t just a theoretical problem; it’s already causing real-world issues. Examining specific instances reveals the diverse ways this gap manifests and the significant consequences it can have. Let’s dive into some case studies to illustrate the point.

Analyzing real-world examples highlights the complexities and varying impacts of the detection gap. Sometimes, the consequences are relatively minor, while in other situations, the implications are far-reaching and potentially damaging.

The Case of the Misattributed Research Paper

A recent instance involved a seemingly groundbreaking research paper submitted to a prestigious scientific journal. The paper, utilizing impressive statistical modeling and data analysis, proposed a novel approach to a complex medical problem. Peer reviewers initially praised its originality and rigor. However, closer scrutiny, prompted by inconsistencies in the writing style compared to the authors’ previous publications, and a subsequent application of advanced AI detection tools, revealed the paper was largely AI-generated. The consequences were significant: the journal retracted the paper, damaging the reputations of the researchers involved and potentially delaying progress in the relevant field. This incident underscores the need for robust detection mechanisms, particularly in high-stakes academic settings where the integrity of research is paramount. The case also highlights the limitations of traditional peer review processes in identifying sophisticated AI-generated content.

Comparison with a Case of AI-Generated Misinformation

In contrast, consider the spread of AI-generated misinformation during a recent political campaign. A sophisticated chatbot, capable of mimicking human conversational styles, was used to disseminate false information across multiple social media platforms. Unlike the research paper case, this instance did not involve a formal submission process or direct attribution to a specific individual or entity. The detection of the AI-generated content proved significantly more challenging due to its dynamic and decentralized nature. While some instances were identified and flagged, many others likely went undetected, potentially influencing public opinion and electoral outcomes. This case highlights the challenge posed by the rapid dissemination of AI-generated content through social media and the limitations of current detection technologies in identifying and mitigating such threats in real-time.

Hypothetical Scenario: An Undetectable AI-Generated Ransomware Demand

Imagine a scenario where a sophisticated AI generates a ransomware demand so convincingly written and formatted that it completely bypasses all existing detection systems. The demand, personalized to the specific victim and their known vulnerabilities, is delivered via a seemingly legitimate email. The language is perfect, the tone is credible, and the accompanying technical details are accurate and plausible. Because the demand appears entirely human-generated, the victim readily complies, transferring a significant sum of money to the attacker’s untraceable cryptocurrency account. Because nothing flags the message, the attacker can keep operating unnoticed, leading to substantial financial losses for the victim and potentially compromising sensitive data. This hypothetical example illustrates the potential for catastrophic consequences when the detection gap allows malicious actors to leverage AI for criminal activities. The implications extend beyond simple financial loss to include potential breaches of national security or disruption of critical infrastructure.

Wrap-Up

The generative AI detection gap isn’t just a challenge; it’s a wake-up call. The arms race between AI creators and detection developers is far from over. While advancements in watermarking and other detection techniques offer hope, the need for human oversight and ethical considerations remains paramount. Ultimately, navigating this murky landscape requires a multi-pronged approach – technological innovation, critical thinking, and a renewed focus on digital literacy. The future depends on it.