Fast Forward: NSA Warns Us: Adversaries, Private Data, AI Edge

The AI revolution is hurtling forward, and with it comes a chilling new reality: our private data is more vulnerable than ever. The National Security Agency isn't mincing words. Sophisticated adversaries are weaponizing artificial intelligence to breach our defenses, and the implications are staggering. This isn't some far-off sci-fi scenario; it's happening now, and understanding the threat is the first step toward defending against it.

From state-sponsored hackers to organized crime syndicates, the players are diverse, but their goal is the same: exploit AI’s power to steal, manipulate, and weaponize our most sensitive information. Think advanced phishing campaigns, AI-powered decryption tools, and autonomous systems designed to infiltrate our networks. The NSA’s warnings aren’t just cautionary tales; they’re a wake-up call, urging us to bolster our defenses before it’s too late. This race against time demands a multi-faceted approach, combining technological innovation with ethical considerations and strategic foresight.

The Accelerating Pace of AI Development and Its Implications for National Security

The breakneck speed of AI development is a double-edged sword. AI offers enormous potential benefits, but it also amplifies national security vulnerabilities, particularly around the protection of private data. The sheer volume and complexity of data now accessible, coupled with increasingly sophisticated AI algorithms, create a perfect storm for malicious actors seeking to exploit weaknesses.

Rapid advances in AI are increasing the exposure of private data. AI systems, designed to learn and adapt, require massive datasets for training. This data, often containing sensitive personal and national security information, becomes a prime target for adversaries. The more powerful the AI, the more valuable this data becomes, creating a dangerous feedback loop: better models make data more valuable to steal, and stolen data in turn trains better models. Sophisticated AI can also automate the breach itself, making attacks more efficient and harder to detect.

AI-Enabled Data Breaches and Manipulation

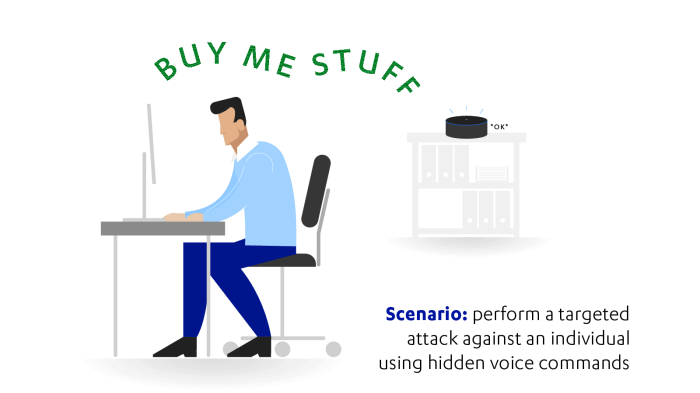

Adversaries can leverage AI’s capabilities in several ways to compromise national security. AI-powered tools can automate phishing attacks, creating highly personalized and convincing messages to trick individuals into revealing sensitive credentials. Deepfakes, synthetic media generated by AI, can be used to spread disinformation and sow discord, undermining public trust and potentially influencing policy decisions. Furthermore, AI can be used to analyze vast amounts of data to identify vulnerabilities in security systems, allowing for targeted attacks with a high probability of success. The ability of AI to adapt and learn from past failures makes it a particularly formidable threat.

AI Technologies Posing Significant National Security Risks

Several specific AI technologies present considerable risks. Advanced machine learning algorithms, particularly deep learning models, can be trained to identify and exploit patterns in complex systems, including national security infrastructure. Natural Language Processing (NLP) can be used to automate the creation of convincing disinformation campaigns or to analyze vast amounts of textual data to extract sensitive information. Generative Adversarial Networks (GANs), which can generate realistic synthetic data, pose a significant threat because they can create convincing deepfakes. Finally, increasingly sophisticated AI-powered autonomous weapons systems raise ethical and security concerns about unintended consequences and the risk of escalating conflicts.

Hypothetical Scenario: AI-Enabled National Security Breach

Imagine a scenario where a sophisticated adversary uses AI to target a nation’s critical infrastructure. First, AI-powered tools analyze publicly available data to identify vulnerabilities in the power grid’s control systems. Then, AI-generated phishing emails, personalized to specific individuals within the power grid’s operational team, are deployed. Once access is gained, the adversary deploys AI-driven malware to disrupt operations, causing widespread power outages. This disruption is further amplified by a coordinated disinformation campaign utilizing AI-generated deepfakes to sow confusion and distrust in the government’s response. The entire attack, from initial reconnaissance to widespread disruption, is orchestrated and executed with unprecedented efficiency and precision thanks to AI.

NSA Warnings and the Nature of the Threats

The National Security Agency (NSA) has issued increasingly urgent warnings about the weaponization of artificial intelligence (AI) by malicious actors, highlighting a rapidly evolving threat landscape. These warnings aren’t abstract concerns; they point to concrete vulnerabilities in our data infrastructure and the potential for catastrophic damage to individuals, businesses, and national security. The speed at which AI capabilities are advancing outpaces the development of robust defensive measures, creating a critical window of vulnerability.

The NSA’s warnings underscore the fact that AI is no longer a futuristic threat; it’s a present-day reality being actively exploited. The agency’s public statements, though often veiled in general terms for national security reasons, consistently emphasize the need for proactive measures to mitigate the risks posed by AI-driven attacks. These risks are not limited to hypothetical scenarios; they represent a tangible and escalating danger.

Specific Examples of NSA Warnings Regarding AI-Related Threats to Private Data

While the NSA doesn't publicly detail every specific threat, its general warnings consistently highlight the potential for AI to automate and scale various cyberattacks. For example, AI can be used to create highly sophisticated phishing campaigns, crafting personalized messages at unprecedented scale to target individuals with tailored lures. Similarly, AI can be employed to rapidly identify and exploit vulnerabilities in software and systems, accelerating the pace of attacks and making traditional defensive measures less effective. The NSA's warnings implicitly suggest that AI is being used to enhance existing malicious activities, such as data breaches and espionage, by automating previously labor-intensive processes. The sheer speed and scale that AI enables make these attacks far more dangerous.

Types of Adversaries Posing the Greatest Risk

The threat spectrum is broad, encompassing both state-sponsored and non-state actors. State-sponsored groups possess significant resources and sophisticated capabilities, and they can leverage AI to conduct large-scale espionage, target critical infrastructure, and undermine national security. Criminal organizations also pose a significant threat, using AI to automate financial fraud, identity theft, and other illicit activities, often at a scale previously unimaginable. The NSA's warnings suggest that the lines between these actors are increasingly blurred, with some state-sponsored groups potentially providing AI tools or expertise to criminal organizations, amplifying the overall threat.

Key Vulnerabilities in Current Data Security Infrastructure that AI Exploits

Current data security infrastructure often struggles to keep pace with AI-driven attacks. Traditional security measures, such as firewalls and intrusion detection systems, are designed to detect known threats and patterns. However, AI’s ability to generate novel attack vectors and adapt to defensive measures renders these systems less effective. The sheer volume of data processed by AI-powered attacks can overwhelm traditional systems, making it difficult to identify malicious activity in real-time. Furthermore, AI can be used to bypass authentication mechanisms, exploit subtle vulnerabilities in software, and even create convincing deepfakes for social engineering attacks. The NSA’s implicit message is clear: a fundamental shift in our approach to cybersecurity is required.

Comparison of Threats Posed by AI versus Traditional Cyberattacks

AI-powered cyberattacks represent a significant evolution from traditional attacks. Traditional attacks often rely on brute force or known exploits, while AI-driven attacks leverage machine learning to adapt, learn, and evolve. This adaptability makes them far more difficult to detect and defend against. The scale of AI-powered attacks is also dramatically increased; AI can automate attacks across vast networks, targeting millions of individuals or systems simultaneously. While traditional attacks might focus on specific targets, AI-driven attacks can be far more indiscriminate, causing widespread disruption and damage. The NSA’s warnings imply that the qualitative difference between AI-driven and traditional attacks is as significant as the quantitative difference in scale and speed.

Protecting Private Data in the Age of AI

The NSA's warnings about AI-driven attacks on private data aren't just theoretical; they represent a rapidly evolving threat landscape. The sheer processing power of AI, coupled with its ability to learn and adapt, makes it a formidable weapon in the hands of malicious actors. Protecting our data requires a proactive, multi-layered approach, combining technological solutions with informed user behavior. This section outlines strategies individuals and organizations can implement to safeguard their information in this increasingly complex environment.

Proactive Data Protection Measures

The following table details several mitigation strategies against AI-driven attacks, targeting common vulnerabilities and outlining practical implementation steps. The effectiveness column offers a general assessment; actual effectiveness will depend on the specific implementation and the sophistication of the attack.

| Mitigation Strategy | Target Vulnerability | Implementation Steps | Effectiveness |
|---|---|---|---|
| Multi-Factor Authentication (MFA) | Unauthorized Access | Implement MFA across all accounts, using a combination of methods like passwords, biometrics, and one-time codes. Regularly review and update authentication methods. | High: significantly reduces the risk of unauthorized access, even if credentials are compromised. |
| Data Encryption (at rest and in transit) | Data Breaches | Employ strong encryption algorithms (e.g., AES-256) for both data stored on systems and data transmitted over networks. Manage encryption keys securely with robust key management systems. | High: renders data unintelligible to unauthorized parties, even if intercepted. |
| Regular Security Audits and Penetration Testing | System Vulnerabilities | Conduct regular security assessments to identify and address vulnerabilities in systems and applications. Employ penetration testing to simulate real-world attacks and identify weaknesses. | Medium to High: proactively identifies and addresses weaknesses before they can be exploited. |
| Employee Security Training | Phishing and Social Engineering | Provide employees with regular training on identifying and avoiding phishing attempts, social engineering tactics, and other common attack vectors. Establish clear security protocols and incident reporting procedures. | Medium to High: reduces the likelihood of human error contributing to data breaches. |
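The one-time codes mentioned in the MFA row are commonly produced by the time-based one-time password (TOTP) algorithm of RFC 6238, which builds on the HMAC-based HOTP algorithm of RFC 4226. The sketch below is a minimal, standard-library-only illustration assuming the RFC defaults (HMAC-SHA1, 30-second time steps); the function names and the one-step clock-drift window are illustrative choices, not a prescribed implementation. A production deployment would use a vetted library and rate-limit failed attempts.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) for a single counter value."""
    # The moving factor is the counter encoded as an 8-byte big-endian integer.
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte choose a 4-byte window.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, digits: int = 6, drift: int = 1) -> bool:
    """Accept a code from the current step or up to `drift` steps either side."""
    now = time.time() if at is None else at
    counter = int(now // step)
    for c in range(max(counter - drift, 0), counter + drift + 1):
        # Constant-time comparison avoids leaking how many characters matched.
        if hmac.compare_digest(hotp(secret, c, digits), submitted):
            return True
    return False


if __name__ == "__main__":
    # RFC 6238 Appendix B test secret (ASCII), SHA-1, 8 digits, T = 59 s.
    print(totp(b"12345678901234567890", at=59, digits=8))  # 94287082
```

The drift window trades a little security for usability: accepting neighboring time steps tolerates small clock differences between the server and the user's device.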

Robust Data Encryption Techniques

Implementing robust data encryption involves choosing strong algorithms and managing keys securely. For example, Advanced Encryption Standard (AES) with a 256-bit key is widely considered a strong encryption algorithm. However, the security of the encryption depends heavily on the security of the encryption key. Key management systems should employ secure key generation, storage, and rotation practices to mitigate the risk of key compromise. This might involve hardware security modules (HSMs) for storing keys and employing techniques like key splitting and threshold cryptography to prevent single points of failure. The challenge lies in balancing strong encryption with the need for efficient data access and processing, as AI-powered decryption attempts will likely target weaknesses in key management or encryption implementation.
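Key splitting can be illustrated with Shamir's secret sharing, one well-known k-of-n threshold scheme: a key is split into n shares so that any k of them reconstruct it, while fewer than k reveal nothing about it. The sketch below is a minimal, standard-library-only illustration assuming a 256-bit key and arithmetic modulo the Mersenne prime 2**521 - 1; the function names are hypothetical. It is not hardened (no share authentication, no constant-time arithmetic), so real key custody would rely on an HSM or an audited library.

```python
import secrets
from typing import List, Tuple

# Field modulus: 2**521 - 1 is a Mersenne prime, comfortably larger than a 256-bit key.
PRIME = 2 ** 521 - 1
Share = Tuple[int, int]  # (x, y) point on the secret polynomial


def split_key(key: bytes, threshold: int, shares: int) -> List[Share]:
    """Split `key` so that any `threshold` of the `shares` points recover it."""
    if not 1 < threshold <= shares:
        raise ValueError("need 1 < threshold <= shares")
    secret = int.from_bytes(key, "big")
    if secret >= PRIME:
        raise ValueError("key too large for the field")
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x: int) -> int:
        acc = 0
        for c in reversed(coeffs):  # Horner's rule, modulo PRIME
            acc = (acc * x + c) % PRIME
        return acc

    # x = 0 is never handed out, because f(0) is the secret itself.
    return [(x, evaluate(x)) for x in range(1, shares + 1)]


def recover_key(points: List[Share], key_len: int = 32) -> bytes:
    """Lagrange interpolation at x = 0 recovers the polynomial's constant term.

    With fewer than `threshold` shares the result is unrelated to the key
    (and usually too large for `key_len`, so to_bytes raises OverflowError).
    """
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret.to_bytes(key_len, "big")


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # e.g. an AES-256 data-encryption key
    parts = split_key(key, threshold=3, shares=5)
    print(recover_key(parts[:3]) == key, recover_key([parts[0], parts[2], parts[4]]) == key)
```

Storing the five shares with different custodians or HSMs removes the single point of failure: compromising one or two of them yields nothing, and losing up to two does not lose the key.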

AI-Based Data Breach Detection and Response

A system for detecting and responding to AI-based data breaches needs to be proactive and adaptable. This requires employing advanced threat detection systems that can analyze network traffic, system logs, and user behavior for anomalies indicative of a breach. Machine learning algorithms can be trained to identify patterns associated with known attack vectors and flag suspicious activity. The system should also include automated response capabilities, such as isolating compromised systems, blocking malicious traffic, and initiating incident response procedures. Human oversight remains crucial, however, to validate alerts and make critical decisions. Real-time threat intelligence feeds can further enhance the system’s effectiveness by providing up-to-date information on emerging threats.
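The detect, respond, and review loop described above can be sketched as follows. As a deliberately simple stand-in for a trained machine-learning model, this sketch scores each host's current outbound traffic against that host's own history using a z-score; the threshold, the host names, and the `propose_response` policy are hypothetical. Containment actions are only proposed, and nothing runs until an analyst approves the alert, reflecting the point that human oversight remains crucial.

```python
import math
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Dict, List, Optional


@dataclass
class Alert:
    host: str
    observed: float
    zscore: float
    proposed_action: str
    approved: bool = False  # set to True only by a human analyst


def score(history: List[float], observed: float) -> float:
    """Standard score of `observed` relative to the host's own history."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is maximally unusual.
        return 0.0 if observed == mu else math.copysign(math.inf, observed - mu)
    return (observed - mu) / sigma


def propose_response(z: float) -> str:
    """Hypothetical policy mapping anomaly severity to a containment step."""
    if z >= 6:
        return "isolate host from network"
    if z >= 3:
        return "block outbound traffic to new destinations"
    return "log only"


def triage(baselines: Dict[str, List[float]], current: Dict[str, float],
           threshold: float = 3.0) -> List[Alert]:
    """Raise an alert for every host whose current volume is anomalously high."""
    alerts = []
    for host, observed in current.items():
        history = baselines.get(host)
        if not history or len(history) < 2:
            continue  # too little history to form a baseline
        z = score(history, observed)
        if z >= threshold:
            alerts.append(Alert(host, observed, z, propose_response(z)))
    return alerts


def execute_if_approved(alert: Alert) -> Optional[str]:
    """Act only on alerts an analyst has explicitly approved."""
    if not alert.approved:
        return None
    return f"EXECUTED: {alert.proposed_action} on {alert.host}"
```

For example, with hourly outbound megabytes `{"db-01": [50, 52, 48, 51, 49]}` as the baseline, a reading of 500 yields a very large z-score and a proposed isolation that stays pending until `approved` is set.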

Advanced Threat Detection Systems

Advanced threat detection systems (ATDS) play a vital role in mitigating AI-related security risks. These systems leverage machine learning, behavioral analysis, and other advanced techniques to identify sophisticated threats that traditional security tools might miss. ATDS can analyze vast amounts of data from various sources, identifying subtle anomalies that could indicate a breach in progress. Examples include detecting unusual access patterns, identifying malicious code, and recognizing data exfiltration attempts. By integrating with other security tools and providing real-time alerts, ATDS enable organizations to respond swiftly and effectively to AI-driven attacks, minimizing the impact of a breach.
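One of the signals listed above, unusual access patterns, can be illustrated with a simple behavioral profile. The sketch below is a toy under stated assumptions, not how any particular ATDS product works: it records which resources each user touched, and at which hours, during a baseline period, then reports why a new event falls outside that profile. The event fields and both rules are hypothetical; real systems combine many more signals with learned models.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set


@dataclass(frozen=True)
class AccessEvent:
    user: str
    resource: str
    hour: int  # 0-23, local time of the access


class AccessProfile:
    """Per-user record of resources and hours seen during a baseline period."""

    def __init__(self) -> None:
        self.resources: Dict[str, Set[str]] = defaultdict(set)
        self.hours: Dict[str, Set[int]] = defaultdict(set)

    def learn(self, events: Iterable[AccessEvent]) -> None:
        for e in events:
            self.resources[e.user].add(e.resource)
            self.hours[e.user].add(e.hour)

    def reasons(self, e: AccessEvent) -> List[str]:
        """Return the (possibly empty) list of reasons this event looks unusual."""
        # Membership tests do not create entries in a defaultdict.
        if e.user not in self.resources:
            return ["user has no baseline"]
        found = []
        if e.resource not in self.resources[e.user]:
            found.append("first access to this resource")
        if e.hour not in self.hours[e.user]:
            found.append("access outside usual hours")
        return found
```

Returning reasons rather than a bare yes/no gives the analyst reviewing an alert something concrete to check, which fits the real-time alerting role described above.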

The Ethical Considerations of AI and National Security

The rapid advancement of artificial intelligence (AI) presents unprecedented opportunities for national security, but also introduces a complex web of ethical dilemmas. The potential for AI to enhance surveillance, automate decision-making in warfare, and analyze vast datasets raises serious concerns about privacy, accountability, and the potential for bias. Balancing the benefits of AI-powered security with the imperative to uphold ethical principles is a critical challenge for governments and technology developers alike.

The use of AI in national security contexts necessitates a careful consideration of its impact on individual privacy. Facial recognition technology, predictive policing algorithms, and AI-driven surveillance systems, while potentially improving security, can also infringe on fundamental rights if not implemented and regulated responsibly. The collection and analysis of personal data, often without explicit consent, raises concerns about potential misuse and the chilling effect on freedom of expression and assembly. This delicate balance between security and liberty demands robust ethical frameworks and stringent regulations.

AI Regulation in National Security

Different nations are adopting diverse approaches to regulating AI in national security. Some favor a more permissive approach, focusing on promoting innovation while establishing broad ethical guidelines. Others prefer stricter regulation, emphasizing rigorous oversight and accountability mechanisms. The European Union, for instance, is leading the charge with its AI Act, which classifies AI systems by risk level and imposes stricter requirements on high-risk applications, although the Act explicitly excludes systems used exclusively for military, defense, or national security purposes. Meanwhile, the United States is pursuing a more fragmented approach, with various agencies and departments developing their own guidelines and regulations. This lack of a unified national strategy makes it harder to ensure consistent ethical standards and effective oversight.

Bias in AI Systems and National Security

AI systems are trained on data, and if that data reflects existing societal biases, the AI will likely perpetuate and even amplify those biases. For example, facial recognition systems have been shown to be less accurate in identifying individuals with darker skin tones, potentially leading to misidentification and wrongful arrests. In national security applications, biased AI could lead to discriminatory outcomes, impacting the targeting of individuals or groups based on race, ethnicity, or other protected characteristics. This not only undermines the fairness and legitimacy of security operations but also erodes public trust in government institutions. Mitigating bias requires careful attention to data quality, algorithmic transparency, and rigorous testing and evaluation of AI systems before deployment.
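Rigorous testing can start with something as simple as measuring error rates separately for each demographic group in a labeled evaluation set, rather than reporting one overall accuracy figure that can hide disparities. The sketch below assumes a hypothetical record format of `(group, predicted_match, true_match)` for a face-matching system and reports each group's false match rate (FMR) and false non-match rate (FNMR), plus a worst-to-best ratio as a crude disparity signal; the format and the ratio are illustrative assumptions, not a standard methodology.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, bool, bool]  # (group, predicted_match, true_match)


def error_rates(records: Iterable[Record]) -> Dict[str, Dict[str, float]]:
    """False match rate (FMR) and false non-match rate (FNMR) for each group."""
    counts: Dict[str, Dict[str, int]] = defaultdict(
        lambda: {"false_match": 0, "non_mated": 0, "false_non_match": 0, "mated": 0})
    for group, predicted, actual in records:
        c = counts[group]
        if actual:  # same person: a miss is a false non-match
            c["mated"] += 1
            c["false_non_match"] += int(not predicted)
        else:  # different people: a hit is a false match
            c["non_mated"] += 1
            c["false_match"] += int(predicted)
    return {
        g: {
            "FMR": c["false_match"] / c["non_mated"] if c["non_mated"] else math.nan,
            "FNMR": c["false_non_match"] / c["mated"] if c["mated"] else math.nan,
        }
        for g, c in counts.items()
    }


def disparity_ratio(rates: Dict[str, Dict[str, float]], metric: str = "FMR") -> float:
    """Worst-to-best ratio of `metric` across groups; 1.0 means no measured gap."""
    values = [r[metric] for r in rates.values() if not math.isnan(r[metric])]
    if not values:
        raise ValueError(f"no group has a defined {metric}")
    worst, best = max(values), min(values)
    if best == 0:
        # One group is error-free: any error elsewhere is an unbounded gap.
        return 1.0 if worst == 0 else math.inf
    return worst / best
```

A ratio well above 1 on held-out data is a signal to revisit training data or decision thresholds before deployment; what ratio is acceptable is a policy decision that this code cannot settle.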

A Framework for Ethical AI Development and Deployment

An ethical framework for AI development and deployment in national security should prioritize transparency, accountability, and human oversight. This framework should include mechanisms for ensuring data privacy and security, rigorous testing and evaluation of AI systems to identify and mitigate bias, and clear guidelines for human intervention in critical decision-making processes. Furthermore, establishing independent oversight bodies to monitor the use of AI in national security and investigate potential abuses is crucial. Open dialogue among policymakers, technologists, and civil society is essential to develop and implement ethical guidelines that effectively balance the need for national security with the protection of fundamental rights and freedoms. The development of robust explainable AI (XAI) techniques, which provide insights into the decision-making processes of AI systems, is also crucial for enhancing transparency and accountability.
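Explainable AI covers many techniques. As a minimal illustration of the idea, assuming a simple linear risk-scoring model (the weights, bias, baseline, and feature names below are hypothetical), each feature's contribution to a decision can be reported as weight × (value − baseline value), so a reviewer can see which inputs drove the score. For a linear model this decomposition is exact; complex models need dedicated methods such as SHAP or integrated gradients, and this sketch only shows the kind of output those methods aim to provide.

```python
from typing import Dict, List, Tuple

# Hypothetical model parameters, chosen for illustration only.
WEIGHTS: Dict[str, float] = {"failed_logins": 0.8, "new_device": 1.5, "data_volume_gb": 0.3}
BIAS = -2.0
# Reference ("typical") input against which contributions are measured.
BASELINE: Dict[str, float] = {"failed_logins": 0.0, "new_device": 0.0, "data_volume_gb": 1.0}


def risk_score(x: Dict[str, float]) -> float:
    """Linear risk score: bias plus the weighted sum of features."""
    return BIAS + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)


def explain(x: Dict[str, float]) -> List[Tuple[str, float]]:
    """Per-feature contributions relative to BASELINE, largest magnitude first.

    By linearity, the contributions sum exactly to
    risk_score(x) - risk_score(BASELINE).
    """
    contribs = [(f, WEIGHTS[f] * (x[f] - BASELINE[f])) for f in WEIGHTS]
    return sorted(contribs, key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    event = {"failed_logins": 5, "new_device": 1, "data_volume_gb": 3}
    for feature, contribution in explain(event):
        print(f"{feature:>15}: {contribution:+.2f}")
```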

The “AI Edge” and its Strategic Significance

The rapid advancement of artificial intelligence (AI) has fundamentally reshaped the global security landscape. No longer a futuristic concept, AI is a tangible force, offering significant advantages to nations and actors who master its deployment. This “AI edge” represents a new frontier in the competition for power, profoundly impacting the nature of conflict and the balance of power in the digital realm and beyond.

The integration of AI into cyberattacks provides adversaries with unprecedented capabilities. This strategic advantage translates into enhanced efficiency, scale, and sophistication in attacks, posing significant challenges to traditional defensive measures.

AI-Enhanced Cyberattack Capabilities

AI significantly amplifies the effectiveness of cyberattacks. For instance, AI-powered malware can autonomously adapt to changing security environments, making it much harder to detect and neutralize. AI algorithms can also analyze vast amounts of data to identify vulnerabilities far more quickly than human analysts, enabling targeted and highly effective attacks. Furthermore, AI can automate launching attacks at scale, overwhelming defenses and maximizing damage. Consider a scenario in which AI identifies a zero-day vulnerability in a critical infrastructure system. Defenders relying on traditional methods might need days or weeks to discover and patch the flaw, whereas an AI-powered attack could exploit it within hours, causing widespread disruption.

The AI Edge and the Balance of Power

The “AI edge” dramatically shifts the balance of power in national security. Nations and organizations possessing advanced AI capabilities gain a distinct advantage in both offensive and defensive cybersecurity operations. This asymmetry can create instability, as less technologically advanced actors may find themselves increasingly vulnerable to sophisticated AI-driven attacks. The ability to predict and preempt attacks, as well as to rapidly adapt to new threats, becomes a crucial determinant of national security strength. This is analogous to the shift in military power brought about by the development of nuclear weapons – possession of the technology grants a significant advantage.

Offensive and Defensive Applications of AI in Cybersecurity

AI’s dual-use nature is a defining characteristic of this new era. On the offensive side, AI can be used to develop more sophisticated malware, automate phishing campaigns, and conduct large-scale denial-of-service attacks. Defensively, AI can enhance threat detection, improve incident response times, and automate security patching. AI-powered systems can analyze network traffic in real-time, identifying malicious activity and responding accordingly. This creates a dynamic arms race, with both sides constantly seeking to develop more advanced AI capabilities to maintain a strategic edge.

Strategic Implications of the AI Edge: A Hypothetical Scenario

Imagine a hypothetical conflict between two nations, Nation A and Nation B, in which Nation A possesses significantly more advanced AI capabilities. Nation A's cyber defenses form a robust, multi-layered system: a complex network of interconnected nodes that constantly shifts and reinforces itself as AI-driven analysis of incoming threat data reveals new attacks. Nation B's defenses, by contrast, are static and easily overwhelmed by Nation A's AI-powered attacks. On offense, Nation A deploys agile, adaptable attack vectors, such as AI-driven malware and automated exploitation tools, that constantly probe and exploit weaknesses in Nation B's systems. The likely result is a swift and decisive victory for Nation A. This scenario illustrates how the AI edge can lead to asymmetric warfare, in which a technological advantage translates directly into strategic dominance.

Conclusion

The NSA’s warnings about the AI edge in the cyberwarfare landscape should be taken seriously. The speed of AI development outpaces our current security measures, leaving us vulnerable to increasingly sophisticated attacks. Protecting our private data in this new era demands a proactive approach, encompassing robust encryption, advanced threat detection systems, and a comprehensive understanding of the ethical implications of AI in national security. The future of data security hinges on our ability to adapt and innovate, staying one step ahead of those who would exploit the power of AI for malicious purposes. The stakes are high, and the race is on.