AI physical intelligence, driven by machine learning, isn’t just another tech buzzword; it marks the start of a new era in which artificial intelligence moves beyond the digital realm and interacts with the physical world in unprecedented ways. Imagine robots that learn and adapt from experience, navigating complex environments and performing intricate tasks. This isn’t science fiction; it is increasingly the reality shaping our future, combining the power of machine learning with the dexterity of physical embodiment.

This exploration dives deep into the core components of AI physical intelligence, examining how machine learning algorithms empower these systems to perceive, learn, and act. We’ll unpack the challenges, explore the ethical considerations, and peer into the crystal ball of future applications across healthcare, manufacturing, and beyond. Buckle up, because the journey into the world of AI physical intelligence is about to begin.

Defining AI Physical Intelligence

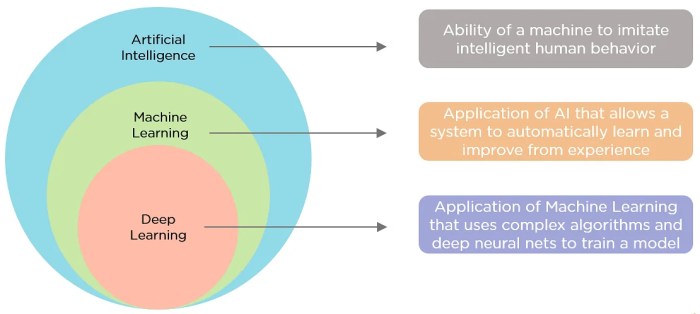

Source: simplilearn.com

AI, for a long time, has been largely confined to the digital realm – crunching numbers, analyzing data, and making predictions within the confines of a computer. But a new frontier is emerging: AI Physical Intelligence. This isn’t just about software; it’s about imbuing machines with the ability to understand and interact with the physical world in a way that’s both intelligent and adaptable. Think less about a computer program and more about a robot that truly *understands* its environment and acts accordingly.

AI physical intelligence blends the power of artificial intelligence with the dexterity and sensory capabilities of robots. Unlike purely software-based AI, which operates solely within a digital environment, AI physical intelligence involves the direct interaction of AI algorithms with the physical world through sensors, actuators, and physical embodiment. This allows for a level of responsiveness and adaptability that surpasses traditional robotic systems.

Real-World Applications of AI Physical Intelligence

AI physical intelligence is already transforming various industries. Consider Boston Dynamics’ robots, which can navigate complex terrain, open doors, and even perform acrobatic feats. These behaviors are not simple scripted playback: they combine model-based control with, increasingly, learned components that let the robots adjust to their surroundings in real time. Self-driving cars are another prime example, constantly processing sensor data (from cameras, lidar, and radar) to make split-second decisions about navigation and obstacle avoidance. In manufacturing, AI-powered robotic arms are learning to perform increasingly complex tasks with greater precision and flexibility, adapting to variations in materials and workflow. The technology also reaches into surgery, where robotic systems, increasingly augmented with AI for tasks such as image guidance, help surgeons perform minimally invasive procedures with enhanced accuracy.

Key Components and Technologies Enabling AI Physical Intelligence

Several key components work together to create AI physical intelligence. First, we need sophisticated sensors, like cameras, lidar, and tactile sensors, to provide a rich understanding of the environment. This sensory data is then processed by powerful AI algorithms, often leveraging deep learning techniques, to interpret the information and make decisions. These algorithms need to be robust enough to handle noisy data and unexpected situations. Actuators, such as motors and robotic limbs, translate the AI’s decisions into physical actions. Finally, a robust control system is crucial to ensure safe and effective interaction with the physical world. This often involves techniques like reinforcement learning, allowing the AI to learn optimal control strategies through trial and error. Cloud computing also plays a significant role, providing the computational power needed for complex AI algorithms and enabling data sharing across multiple systems.
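One classic way to make sensor processing "robust enough to handle noisy data" is a Kalman filter. The sketch below is a minimal scalar version, assuming a quantity that stays (nearly) constant and Gaussian sensor noise; the function name `kalman_1d`, the noise variances, and the 2.0 m obstacle distance are illustrative assumptions, not values from any real robot.

```python
import random

def kalman_1d(measurements, process_var=1e-4, meas_var=0.25, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a quantity assumed to be (nearly) constant.

    process_var: how much the true value may drift per step (assumed)
    meas_var: variance of the sensor noise (assumed)
    x0, p0: initial estimate and its variance
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state is modelled as constant, so only the uncertainty grows
        p += process_var
        # Update: the Kalman gain k weighs the new reading against the prediction
        k = p / (p + meas_var)
        x += k * (z - x)
        p *= 1 - k
        estimates.append(x)
    return estimates

# Usage: a hypothetical range sensor observing an obstacle 2.0 m away, noise std 0.5 m
random.seed(0)
readings = [2.0 + random.gauss(0, 0.5) for _ in range(200)]
estimates = kalman_1d(readings)
print(f"last raw reading: {readings[-1]:.2f} m, filtered estimate: {estimates[-1]:.2f} m")
```

Real systems fuse several sensors with multidimensional state (position, velocity, orientation), but the predict/update cycle is the same idea.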

Comparison of AI Physical Intelligence and Traditional Robotics

| Component | AI Physical Intelligence | Traditional Robotics | Differences |
|---|---|---|---|
| Control System | Adaptive, learning-based control (e.g., reinforcement learning) | Pre-programmed, rule-based control | AI Physical Intelligence systems adapt to unforeseen circumstances, while traditional robots rely on pre-defined instructions. |
| Perception | Advanced sensor fusion and deep learning for robust environmental understanding | Limited sensor capabilities, often relying on simple sensors and pre-defined object recognition | AI Physical Intelligence offers a much richer understanding of the environment, allowing for more complex interactions. |
| Adaptability | High adaptability to changing environments and tasks | Low adaptability; requires reprogramming for new tasks | AI Physical Intelligence systems can learn and adapt to new situations with far less manual reprogramming than traditional robots. |
| Decision-Making | Autonomous decision-making based on real-time data analysis | Limited autonomy; decisions are largely pre-programmed | AI Physical Intelligence enables robots to make more complex and nuanced decisions in real time. |

The Role of Machine Learning in AI Physical Intelligence

Machine learning is the engine driving the progress of AI physical intelligence. It allows these systems to learn from data, adapt to new situations, and improve their performance over time without explicit programming for every scenario. This ability is crucial for creating robots and other physical systems that can operate effectively in complex and unpredictable real-world environments. How capable these systems become depends heavily on which machine learning algorithms are used and how they are applied.

Various machine learning algorithms are instrumental in enhancing the capabilities of AI physical intelligence systems. The choice of algorithm often depends on the specific task and the nature of the available data. The iterative process of data collection, model training, and performance evaluation is fundamental to the development and improvement of these systems.

Reinforcement Learning in Adaptive AI Physical Intelligence

Reinforcement learning (RL) is particularly crucial for developing adaptive and robust AI physical intelligence. Unlike supervised learning, which relies on labeled data, RL allows AI agents to learn through trial and error. The agent interacts with an environment, receives rewards or penalties for its actions, and learns a policy that aims to maximize its cumulative reward. This makes RL well suited to tasks requiring complex decision-making and continuous adaptation, such as robot navigation, manipulation, and control. For instance, a robot learning to walk using RL might initially stumble frequently, but through repeated attempts and feedback from its sensors, it progressively refines its gait, achieving greater stability and efficiency. The reward system might incentivize upright posture and forward progress, penalizing falls and inefficient movements.
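The reward structure described above can be illustrated with tabular Q-learning, one classic RL algorithm. This is a minimal sketch on a toy, hypothetical walking task, not how real legged robots are trained (those typically use deep RL over continuous states, often in simulation). Every move costs a little (inefficient movement), reaching the goal pays off (forward progress), and a long stride on uneven ground causes a fall (large penalty). All names and numbers here are illustrative assumptions.

```python
import random

N_STATES = 7              # positions 0..6 on a short track (hypothetical toy world)
GOAL = 6
RISKY = {2}               # uneven ground: a long stride from here makes the robot fall
ACTIONS = (0, 1)          # 0 = small step (+1 position), 1 = long stride (+2 positions)

def env_step(state, action):
    """Return (next_state, reward, done) for the toy walking task."""
    if action == 1 and state in RISKY:
        return state, -10.0, True                 # fall: large penalty, episode ends
    next_state = min(state + (1 if action == 0 else 2), GOAL)
    reward = -1.0                                  # every move costs energy and time
    if next_state == GOAL:
        return next_state, reward + 10.0, True     # forward progress pays off at the goal
    return next_state, reward, False

def q_learning(episodes=2000, alpha=0.5, gamma=0.95, epsilon=0.2, seed=0):
    """Tabular Q-learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)                      # explore
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])  # exploit
            next_state, reward, done = env_step(state, action)
            # Bootstrapped target: reward plus discounted value of the best next action
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

def greedy_rollout(q, max_steps=20):
    """Follow the learned policy from the start; return visited states and a success flag."""
    state, path = 0, [0]
    for _ in range(max_steps):
        action = max(ACTIONS, key=lambda a: q[state][a])
        state, _, done = env_step(state, action)
        path.append(state)
        if done:
            break
    return path, state == GOAL

q = q_learning()
path, reached_goal = greedy_rollout(q)
print(path, reached_goal)
```

Early episodes fall often; after training, the greedy policy takes small steps on the risky square and long strides elsewhere, which is the "stumble, then refine" pattern in miniature.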

Supervised Learning in AI Physical Intelligence

Supervised learning techniques are employed when labeled data is available. This means that each data point is paired with a corresponding output or label. For example, in robotic grasping, a supervised learning model could be trained on images of objects paired with the robotic hand configurations needed to grasp them successfully. The model learns to map image features to grasping actions, enabling the robot to generalize, within limits, to new objects it has not seen before. Another example involves training a model to predict the trajectory of a moving object based on sensor data, enabling a robot to interact effectively with its dynamic environment.
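The idea of mapping labeled object features to grasping actions can be sketched with a k-nearest-neighbour classifier. This is a deliberately simple stand-in: real grasping systems usually learn from images with deep networks. The two features (object width and height in cm), the grasp labels "pinch" and "power", and the training examples are all hypothetical.

```python
import math
from collections import Counter

# Hypothetical labelled examples: (object width cm, object height cm) -> grasp type
TRAINING_DATA = [
    ((1.0, 1.0), "pinch"),
    ((1.5, 2.0), "pinch"),
    ((2.0, 1.5), "pinch"),
    ((7.0, 10.0), "power"),
    ((8.0, 12.0), "power"),
    ((6.5, 9.0), "power"),
]

def predict_grasp(features, training=TRAINING_DATA, k=3):
    """Predict a grasp label by majority vote among the k nearest labelled examples."""
    nearest = sorted(training, key=lambda ex: math.dist(features, ex[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

print(predict_grasp((1.2, 1.8)))   # small, unseen object -> pinch
print(predict_grasp((7.5, 11.0)))  # large, unseen object -> power
```

`math.dist` requires Python 3.8 or later.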

Unsupervised Learning in AI Physical Intelligence

Unsupervised learning is valuable when labeled data is scarce or unavailable. Clustering algorithms, for instance, can be used to group similar sensor readings or robot behaviors, revealing underlying patterns and structures in the data. This can help identify anomalies, optimize robot control strategies, or even discover new features for supervised learning models. Consider a robot exploring an unknown environment. Unsupervised learning could help it segment the environment into distinct regions based on sensor data, facilitating more efficient navigation and exploration. Anomaly detection techniques, a subset of unsupervised learning, can also be used to identify malfunctions or unexpected events during robot operation.
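To make the clustering idea concrete, here is a minimal k-means sketch that groups unlabeled sensor readings into regions. The readings, described as hypothetical (mean range in metres, return intensity) pairs for a corridor versus an open hall, are invented for illustration, and the farthest-point initialisation is one simple deterministic choice among several.

```python
import math

def kmeans(points, k, iterations=20):
    """Lloyd's k-means with deterministic farthest-point initialisation."""
    centroids = [points[0]]
    while len(centroids) < k:
        # Next centroid: the point farthest from its nearest existing centroid
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    return centroids, labels

# Hypothetical (range m, intensity) readings: three near walls, three in an open hall
readings = [(1.0, 0.8), (1.2, 0.75), (0.9, 0.85), (6.0, 0.3), (6.5, 0.25), (5.8, 0.35)]
centroids, labels = kmeans(readings, k=2)
print(labels)  # -> [0, 0, 0, 1, 1, 1]
```

No labels were supplied, yet the readings separate into two regions. With real data, features on different scales (metres versus a 0 to 1 intensity) would normally be standardised first.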

A Typical Machine Learning Pipeline in AI Physical Intelligence

The process of applying machine learning to AI physical intelligence typically involves a structured pipeline. Understanding this pipeline is crucial for developing and deploying effective systems.

The pipeline typically runs through these stages:

1. Data Acquisition: Sensors collect data (images, force readings, joint angles, etc.).

2. Data Preprocessing: Data is cleaned, filtered, and transformed to improve model performance (noise reduction, feature extraction).

3. Model Selection: An appropriate machine learning algorithm is chosen based on the task and data (e.g., RL for control, supervised learning for classification).

4. Model Training: The model is trained using the preprocessed data. This involves adjusting the model’s parameters to minimize error and improve accuracy.

5. Model Evaluation: The model’s performance is evaluated using metrics relevant to the task (accuracy, precision, recall, reward).


6. Deployment and Monitoring: The trained model is deployed on the physical system, and its performance is continuously monitored and refined.

This iterative process, often involving feedback loops, ensures continuous improvement and adaptation of the AI physical intelligence system.
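The six stages can be sketched end to end on a small, simulated problem. In this minimal example, assumed purely for illustration, a robot estimates gripper force from motor current: the data are synthetic, the "true" relation (4 N per amp plus 1 N) is invented, and linear regression is chosen only because it keeps every stage short.

```python
import random

def acquire(n, seed=0):
    """1. Data acquisition (simulated): motor current vs. measured gripper force."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        current = rng.uniform(0.5, 2.0)                  # amps, hypothetical range
        force = 4.0 * current + 1.0 + rng.gauss(0, 0.2)  # assumed true relation plus sensor noise
        samples.append((current, force))
    return samples

def preprocess(samples):
    """2. Preprocessing: drop physically implausible readings."""
    return [(c, f) for c, f in samples if 0.0 < c < 5.0 and f >= 0.0]

def train(samples):
    """3-4. Model selection and training: 1-D linear regression by ordinary least squares."""
    n = len(samples)
    mean_x = sum(c for c, _ in samples) / n
    mean_y = sum(f for _, f in samples) / n
    sxy = sum((c - mean_x) * (f - mean_y) for c, f in samples)
    sxx = sum((c - mean_x) ** 2 for c, _ in samples)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def evaluate(model, samples):
    """5. Evaluation: mean absolute error (N) on held-out data."""
    slope, intercept = model
    return sum(abs(slope * c + intercept - f) for c, f in samples) / len(samples)

def drift_detected(model, live_samples, threshold):
    """6. Monitoring: flag the model for retraining when live error exceeds the threshold."""
    return evaluate(model, live_samples) > threshold

# Usage
data = preprocess(acquire(200))
train_set, test_set = data[:150], data[150:]
model = train(train_set)
mae = evaluate(model, test_set)
print(f"slope={model[0]:.2f}, intercept={model[1]:.2f}, test MAE={mae:.3f}")
# Simulated gripper wear: the same current now yields 1.5 N less force
worn = [(c, f - 1.5) for c, f in acquire(50, seed=1)]
print("drift detected:", drift_detected(model, worn, threshold=3 * mae))
```

The monitoring step is what closes the feedback loop: when drift is flagged, the pipeline returns to data acquisition and retraining.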

Challenges and Limitations of AI Physical Intelligence

AI physical intelligence, while promising a future of incredible automation and efficiency, faces significant hurdles before widespread adoption becomes a reality. These challenges span technological limitations, ethical considerations, and practical constraints, each demanding careful consideration and innovative solutions. Overcoming these obstacles is crucial for unlocking the full potential of this transformative technology.

Technological Hurdles

Developing robust and reliable AI physical intelligence systems requires overcoming several complex technological barriers. Current AI models often struggle with the unpredictable nature of the real world, lacking the adaptability and robustness needed to handle unexpected situations or environmental changes. For instance, a robot designed for warehouse operations might struggle to navigate a cluttered aisle or adapt to a sudden power outage. Another significant challenge lies in the development of advanced sensors and actuators that provide the necessary precision, dexterity, and responsiveness for complex tasks. The creation of truly versatile robotic hands capable of manipulating objects with the dexterity of a human hand remains a significant research area. Finally, efficient and reliable communication protocols are needed to enable seamless interaction between multiple AI physical intelligence systems, particularly in collaborative tasks.

Safety and Ethical Considerations

Deploying AI physical intelligence systems raises significant safety and ethical concerns across various environments. In industrial settings, malfunctions could lead to accidents, injuries, or damage to equipment. Imagine a robotic arm malfunctioning during an assembly line operation, causing a safety hazard for nearby workers. In autonomous vehicles, the ethical dilemmas surrounding accident avoidance algorithms require careful consideration and robust safety protocols. For example, should a self-driving car prioritize the safety of its passengers over pedestrians in an unavoidable accident scenario? Furthermore, the potential for misuse of AI physical intelligence systems, such as in autonomous weapons systems, necessitates stringent regulations and ethical guidelines to prevent harm. The transparency and accountability of decision-making processes within these systems are also crucial ethical considerations.

Power Consumption and Computational Requirements

AI physical intelligence systems are computationally intensive, requiring significant processing power to handle real-time data processing, sensor integration, and complex control algorithms. This leads to high energy consumption, which can be a major limitation for mobile robots or systems operating in remote locations. For example, a large-scale robotic system for disaster relief might require substantial power sources, limiting its deployment capabilities. Moreover, the need for high-bandwidth communication to transmit and process large volumes of sensor data adds to the computational burden and energy requirements. Developing more energy-efficient hardware and algorithms is crucial to overcome these limitations and broaden the applicability of AI physical intelligence.

Potential Risks Associated with Malfunctioning Systems

The potential risks associated with malfunctioning or uncontrolled AI physical intelligence systems are substantial and multifaceted. Unintended actions due to software bugs, sensor failures, or unexpected environmental conditions could lead to property damage, injuries, or even fatalities. Consider a scenario where a robotic surgical assistant malfunctions during an operation, leading to complications for the patient. Furthermore, the potential for adversarial attacks, where malicious actors attempt to compromise the system’s functionality, poses a significant security risk. For example, hackers could potentially gain control of an autonomous vehicle, leading to dangerous consequences. Robust safety mechanisms, including fail-safe protocols and security measures, are critical to mitigate these risks.
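A fail-safe protocol often boils down to a small layer that sits between the AI planner and the motors and refuses unsafe commands. The sketch below is a hypothetical software-only example: the class name `SafetyMonitor`, the 0.5 m/s speed limit, and the 0.2 s sensor timeout are assumptions for illustration. It stops the robot when sensor data goes stale or when a software bug produces a non-finite command, and otherwise clamps speed to a fixed envelope.

```python
import math
from dataclasses import dataclass

@dataclass
class SafetyMonitor:
    """Software fail-safe between the planner and the motors (limits are hypothetical)."""
    max_speed: float = 0.5                  # m/s, assumed speed limit
    heartbeat_timeout: float = 0.2          # s, assumed maximum age of sensor data
    last_heartbeat: float = float("-inf")   # no sensor data received yet

    def heartbeat(self, now: float) -> None:
        """Call whenever fresh sensor data arrives (e.g. with time.monotonic())."""
        self.last_heartbeat = now

    def filter_command(self, commanded_speed: float, now: float) -> float:
        """Return a speed that is safe to send to the motors."""
        if now - self.last_heartbeat > self.heartbeat_timeout:
            return 0.0  # stale perception: stop rather than act blind
        if not math.isfinite(commanded_speed):
            return 0.0  # NaN/inf from a software bug: stop
        # Clamp to the allowed speed envelope
        return max(-self.max_speed, min(self.max_speed, commanded_speed))

monitor = SafetyMonitor()
monitor.heartbeat(now=10.0)
print(monitor.filter_command(2.0, now=10.1))  # -> 0.5 (clamped)
print(monitor.filter_command(0.3, now=10.5))  # -> 0.0 (sensor data too old)
```

In deployed systems, checks like these are typically duplicated in independent, safety-rated hardware rather than relying on application software alone.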

Future Trends and Applications

AI physical intelligence (API) is poised for explosive growth over the next decade, blurring the lines between the digital and physical worlds. We’re moving beyond simple automation towards systems capable of sophisticated perception, reasoning, and manipulation in dynamic environments. This will revolutionize industries and reshape our daily lives in ways we’re only beginning to imagine.

The next ten years are likely to bring significant advancements in API’s ability to learn and adapt quickly and efficiently. Expect to see a dramatic increase in the complexity of tasks API can handle, moving from relatively simple, structured environments to complex, unstructured real-world scenarios. This will be fueled by breakthroughs in machine learning algorithms, particularly in reinforcement learning and deep learning, allowing API systems to learn from experience and generalize their knowledge to new situations. Increased computational power and the proliferation of edge computing will also play a crucial role, enabling faster processing and real-time responses.

Innovative Applications in Healthcare

API’s impact on healthcare could be transformative. Imagine surgical robots performing minimally invasive procedures with unparalleled precision, guided by AI that can adapt to unforeseen circumstances. We can also envision AI-powered prosthetics that respond intuitively to the user’s intentions, providing a seamless and natural experience. Furthermore, API-driven diagnostic tools could, for some narrowly defined tasks, analyze medical images and patient data faster than human doctors and with comparable or better accuracy, leading to earlier and more effective treatments. For example, a robotic system could perform complex microsurgery, autonomously navigating delicate tissues with greater precision than a human hand allows, reducing the risk of complications and improving patient outcomes.

Innovative Applications in Manufacturing

In manufacturing, API will optimize production lines and improve quality control. Robots equipped with advanced sensors and AI algorithms will be able to handle a wider range of tasks, collaborating with human workers to assemble products with greater speed and efficiency. AI-powered predictive maintenance systems will minimize downtime by identifying potential equipment failures before they occur. Consider a factory floor where robots work alongside humans, seamlessly adapting to changing production demands. The AI system can optimize the workflow in real-time, adjusting the robot’s actions based on the quality of parts being produced and the current workload. This leads to increased efficiency, reduced waste, and higher quality products.
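Predictive maintenance starts with spotting readings that depart from a machine’s normal behaviour. A rolling z-score is one of the simplest ways to do that; the sketch below assumes hypothetical vibration readings and a 3-standard-deviation threshold, whereas production systems often use richer features (such as vibration spectra) and learned models.

```python
import statistics
from collections import deque

def detect_anomalies(readings, window=20, z_threshold=3.0):
    """Flag indices whose value deviates more than z_threshold standard
    deviations from the mean of the preceding `window` readings."""
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mean = statistics.fmean(history)
            std = statistics.stdev(history)
            if std > 0 and abs(value - mean) / std > z_threshold:
                flagged.append(i)
        history.append(value)
    return flagged

# Hypothetical vibration amplitude (mm/s) cycling through normal values, plus one fault spike
readings = [1.0 + 0.05 * ((i % 5) - 2) for i in range(60)]
readings[40] = 2.0
print(detect_anomalies(readings))  # -> [40]
```

Flagged indices would then feed a maintenance scheduler, so the machine is inspected before the fault turns into downtime.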

Innovative Applications in Exploration

API will unlock new possibilities in exploration, whether it’s exploring the depths of the ocean or the surface of Mars. Autonomous robots equipped with advanced sensors and AI algorithms can navigate challenging environments, collect data, and perform tasks that would be too dangerous or difficult for humans. For example, a fleet of underwater robots, guided by API, could map the ocean floor, identify new species, and monitor environmental changes with unprecedented efficiency. Similarly, robots equipped with advanced AI and capable of self-repair could explore distant planets, autonomously collecting samples and conducting scientific experiments.

The Impact of Advancements in Materials Science and Sensor Technology

Advancements in materials science will be critical to the future of API. The development of lighter, stronger, and more durable materials will enable the creation of more robust and agile robots capable of operating in harsh environments. Similarly, breakthroughs in sensor technology will provide API with richer and more detailed information about its surroundings, enabling it to make more informed decisions and perform more complex tasks. For instance, the development of flexible, biocompatible sensors could enable the creation of more sophisticated medical robots capable of interacting with the human body in a minimally invasive way. The development of new, highly sensitive sensors will also enable API to perceive and respond to subtle changes in the environment, making it even more adaptable and responsive.

Case Studies of Successful AI Physical Intelligence Implementations


AI physical intelligence, the ability of machines to perceive, reason, and act in the physical world, is rapidly moving beyond theoretical concepts and into real-world applications. Several successful deployments across diverse sectors demonstrate its transformative potential. These case studies highlight the key technologies, challenges overcome, and the positive outcomes achieved.

Boston Dynamics’ Spot Robot in Industrial Inspection

Spot, Boston Dynamics’ quadrupedal robot, represents a significant advancement in AI physical intelligence. Its success stems from a combination of sophisticated locomotion algorithms, advanced sensor integration, and robust machine learning capabilities for navigation and task execution.

- Key Features: 360° vision, robust mobility in challenging terrains, remote operation, payload carrying capacity, integration with various sensors (e.g., thermal cameras, gas detectors).

- Challenges: Developing robust algorithms for autonomous navigation in unstructured environments, ensuring reliable operation in unpredictable conditions, managing power consumption for extended deployments.

- Outcomes: Improved safety for human inspectors in hazardous environments, increased efficiency in data collection, reduced inspection time and costs, enabling remote inspection of geographically challenging locations.

The underlying technology relies on a combination of computer vision for object recognition and path planning, model-based locomotion control that Boston Dynamics has increasingly supplemented with reinforcement learning, and off-board software for remote operation and data analysis.
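Path planning is easiest to see on an occupancy grid. The sketch below uses A*, a textbook planning algorithm, as an illustration only; Spot’s actual planner is not described here, and the grid, start, and goal are hypothetical.

```python
import heapq

def astar(grid, start, goal):
    """A* shortest path on a 4-connected grid; cells equal to 1 are obstacles."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: never overestimates cost for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]   # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:      # walk back through parents to rebuild the path
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue                     # stale heap entry; a cheaper route was found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None                          # goal unreachable

# Hypothetical site map: a wall across the middle row with a gap on the right
grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
print(astar(grid, (0, 0), (2, 0)))
# -> [(0, 0), (0, 1), (0, 2), (0, 3), (1, 3), (2, 3), (2, 2), (2, 1), (2, 0)]
```

On a real robot, the grid would come from perception (for example, depth cameras marking occupied cells), and the path would be handed to the locomotion controller.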

Autonomous Driving Systems in Self-Driving Cars

The development of self-driving cars exemplifies the complex interplay of AI physical intelligence and machine learning. Companies like Tesla and Waymo have invested heavily in developing systems that enable autonomous navigation, object detection, and decision-making in dynamic environments.

- Key Features: LiDAR, radar, and camera sensors for environmental perception, deep learning models for object detection and classification, sophisticated control algorithms for safe and efficient driving, real-time decision-making capabilities.

- Challenges: Handling unexpected events (e.g., pedestrians suddenly crossing the street), ensuring system safety and reliability in diverse weather conditions, addressing ethical dilemmas in accident avoidance scenarios, navigating complex traffic situations.

- Outcomes: Encouraging safety results within limited operating domains, potential gains in traffic efficiency, enhanced accessibility for individuals with disabilities, and potential for reduced congestion and fuel consumption.

These systems leverage deep convolutional neural networks (CNNs) for image processing, recurrent neural networks (RNNs) for sequential data processing, and reinforcement learning for optimizing driving strategies.

Surgical Robots Assisted by AI

AI physical intelligence is revolutionizing the field of surgery through the development of robotic surgical systems that assist surgeons with precision and dexterity. These systems use AI to enhance the surgeon’s capabilities, leading to improved surgical outcomes.

- Key Features: Haptic feedback, miniature robotic arms with high degrees of freedom, image-guided navigation, AI-powered tools for tissue recognition and segmentation, real-time monitoring of surgical progress.

- Challenges: Ensuring system safety and reliability, developing AI algorithms that can accurately interpret complex surgical scenes, managing the human-robot interaction, addressing ethical considerations related to autonomous surgical actions.

- Outcomes: Minimally invasive procedures, improved surgical precision, reduced recovery times, enhanced patient outcomes.

The technology integrates advanced computer vision techniques, machine learning for surgical task automation, and haptic feedback systems for enhanced surgeon control.

Architectural Representation of a Successful AI Physical Intelligence System

Imagine a layered architecture. The bottom layer comprises various sensors (cameras, LiDAR, IMUs) providing raw data about the environment. This data is processed by a perception layer using computer vision and sensor fusion algorithms to create a rich representation of the surroundings. A planning layer uses this information to determine actions, leveraging algorithms like path planning and motion control. A control layer translates these plans into commands for actuators (motors, grippers). A feedback loop continuously monitors the system’s performance, using machine learning to adapt and improve its behavior over time. This architecture enables the system to learn from its experiences, improving its efficiency and robustness.
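The layered architecture can be sketched as a handful of small classes wired into one loop. This is a minimal, hypothetical skeleton: the class names are invented, exponential smoothing stands in for real sensor fusion, a proportional rule stands in for path planning, and the feedback layer only records tracking error; a learning component that tunes the other layers from that log is left out.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class PerceptionLayer:
    """Turns raw sensor readings into a state estimate (smoothing stands in for sensor fusion)."""
    smoothing: float = 0.5
    estimate: Optional[float] = None

    def update(self, reading: float) -> float:
        if self.estimate is None:
            self.estimate = reading
        else:
            self.estimate += self.smoothing * (reading - self.estimate)
        return self.estimate

@dataclass
class PlanningLayer:
    """Decides what to do: a desired velocity proportional to the remaining distance."""
    goal: float
    gain: float = 0.5

    def plan(self, position: float) -> float:
        return self.gain * (self.goal - position)

@dataclass
class ControlLayer:
    """Translates the plan into a bounded actuator command."""
    max_command: float = 1.0

    def command(self, desired_velocity: float) -> float:
        return max(-self.max_command, min(self.max_command, desired_velocity))

@dataclass
class FeedbackLog:
    """Monitors performance; a learning component could tune the other layers from this log."""
    errors: List[float] = field(default_factory=list)

    def record(self, error: float) -> None:
        self.errors.append(error)

def run(goal: float = 5.0, steps: int = 40, dt: float = 1.0):
    perception, planner = PerceptionLayer(), PlanningLayer(goal)
    controller, log = ControlLayer(), FeedbackLog()
    position = 0.0  # simulated true position; the "sensor" reads it without noise here
    for _ in range(steps):
        estimate = perception.update(position)                 # sensors -> perception
        velocity = controller.command(planner.plan(estimate))  # planning -> control
        position += velocity * dt                              # actuators move the robot
        log.record(abs(goal - position))                       # feedback loop
    return position, log

final_position, log = run()
print(f"final position {final_position:.3f}, first error {log.errors[0]:.1f}")
```

Keeping each layer behind a narrow interface is what lets a team swap, for example, the smoothing filter for a learned perception model without touching planning or control.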

Concluding Remarks


The convergence of AI, machine learning, and physical embodiment is not just a technological leap; it’s a paradigm shift. AI physical intelligence is poised to revolutionize industries, solve complex problems, and reshape our interaction with technology. While challenges remain – ethical considerations, power consumption, and safety – the potential benefits are too significant to ignore. The future is here, and it’s moving, learning, and adapting.